AI tools like Lovable.dev are changing app development. They enable rapid prototyping and give everybody the power to create functional applications through natural language prompts.

These tools can write code up to 20x faster than a developer, but they also introduce unique challenges in testing, debugging, and maintaining the generated applications. When you add AI to the team, you need to be vigilant.

Below, let's explore some common challenges, the scenarios in which they show up, and how you can test for and identify them.

If you want to use the code as a foundation and scale the product later, don't add 300 features before checking and testing it! AI generates hundreds of lines of code at a time, which makes the codebase harder and harder to review and maintain, so test and check the code as early as possible.

Also be aware that these tools will use whatever library they consider best, or one they have a partnership with (for example, Lovable.dev will push you toward Supabase). Some of these libraries and tools might not be the best or cheapest option for your product, so check subscription prices. These AI tools may also pick deprecated libraries, which can conflict with other dependencies as you scale and introduce new bugs.

If you just want to test the market or build a prototype, and you are completely okay with rewriting this MVP from scratch later, there is no need to worry too much.

Common Challenges in Testing AI Coded Apps

1. Code Quality and Optimisation

Scenario: An e-commerce startup uses Lovable.dev to build a shopping platform. The generated code includes a product listing feature but contains redundant database queries that degrade performance.

Generated Code Example:

```javascript
// Generated by AI
let products = [];
for (let productId of productIds) {
  let product = db.query(`SELECT * FROM products WHERE id = ${productId}`);
  products.push(product);
}
```

Issue: The code queries the database inside a loop, resulting in multiple queries for a single operation.

If you only ran a happy-path test scenario, you wouldn't catch this one. In this case you need to actively check the database and its performance.
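Here is one way a non-happy-path test could expose this. It is a minimal sketch that uses a hypothetical `QueryCountingProductsDb` in place of the real database client. The fake does not execute SQL; it only counts round trips. The batched version fetches every product with one `WHERE id IN (...)` query that uses bound parameters, so it also stops interpolating `productId` directly into the SQL string. `buildProductsInQuery` is a hypothetical helper too. The `?` placeholder syntax depends on your database driver.

```javascript
// Hypothetical in-memory stand-in for a products table. It does not run SQL;
// it only counts round trips so a test can spot the query-in-a-loop pattern.
class QueryCountingProductsDb {
  constructor(rows) {
    this.rows = rows;    // array of { id, name }
    this.queryCount = 0; // number of database round trips made so far
  }

  // One round trip, however many ids are requested (like WHERE id IN (...)).
  findByIds(ids) {
    this.queryCount += 1;
    const wanted = new Set(ids);
    return this.rows.filter((row) => wanted.has(row.id));
  }
}

// Builds a parameterized IN query for a non-empty list of ids.
// Placeholder syntax varies by driver ("?" vs "$1"), so treat it as an assumption.
function buildProductsInQuery(productIds) {
  const placeholders = productIds.map(() => "?").join(", ");
  return { sql: `SELECT * FROM products WHERE id IN (${placeholders})`, params: productIds };
}

// Pattern from the generated code: one query per product.
function loadProductsOneByOne(db, productIds) {
  const products = [];
  for (const productId of productIds) {
    products.push(...db.findByIds([productId]));
  }
  return products;
}

// Batched alternative: a single query for all products.
function loadProductsBatched(db, productIds) {
  return db.findByIds(productIds);
}

const rows = [
  { id: 1, name: "Mug" },
  { id: 2, name: "T-shirt" },
  { id: 3, name: "Cap" },
];
const loopDb = new QueryCountingProductsDb(rows);
const batchedDb = new QueryCountingProductsDb(rows);
loadProductsOneByOne(loopDb, [1, 2, 3]);
loadProductsBatched(batchedDb, [1, 2, 3]);

console.log(loopDb.queryCount, batchedDb.queryCount); // 3 1
console.log(buildProductsInQuery([1, 2, 3]).sql);
// SELECT * FROM products WHERE id IN (?, ?, ?)
```

With 3 products the difference looks harmless. A test that asserts the query count stays at 1 will still fail as soon as someone reintroduces the loop.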

2. Limited Customization and Flexibility

Scenario: A nonprofit organization creates an event management app. The app’s AI-generated code fails to include the functionality to calculate the carbon footprint of events.

Generated Code Example:

```javascript
// Generated by AI
events.forEach(event => {
  console.log(`Event: ${event.name}`);
});
```

Issue: The AI didn’t include a custom calculation for carbon emissions.

This is typical. Sometimes the AI only codes the front end and some of the interactions between components, using hardcoded data. It won't build the backend or the logic behind it unless you explicitly ask for it and provide the formula. You can catch this with a simple happy-path test that uses different inputs.
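Below is a minimal sketch of what you might get after explicitly asking for the calculation and supplying the formula. The formula, the field names (`attendees`, `avgTravelKm`, `venueKwh`), the function name `estimateEventEmissionsKg`, and the emission factors are all hypothetical. The factors are placeholders, not real conversion figures, so swap in your own. Running the code with different events shows whether the output actually changes with the data or is hardcoded.

```javascript
// Hypothetical emission factors: placeholders for illustration only,
// NOT real values. Replace them with figures from a source you trust.
const EXAMPLE_FACTORS = {
  kgCo2PerTravelKm: 0.2,
  kgCo2PerKwh: 0.5,
};

// Estimates an event's footprint in kg CO2 from an explicitly supplied formula:
// attendee travel + venue energy use.
function estimateEventEmissionsKg(event, factors) {
  const travel = event.attendees * event.avgTravelKm * factors.kgCo2PerTravelKm;
  const energy = event.venueKwh * factors.kgCo2PerKwh;
  return travel + energy;
}

const events = [
  { name: "Beach clean-up", attendees: 10, avgTravelKm: 5, venueKwh: 0 },
  { name: "Gala dinner", attendees: 100, avgTravelKm: 20, venueKwh: 400 },
];

events.forEach((event) => {
  const kg = estimateEventEmissionsKg(event, EXAMPLE_FACTORS);
  console.log(`Event: ${event.name}, estimated footprint: ${kg.toFixed(1)} kg CO2`);
});
```

If every event prints the same number, or the numbers don't move when you change the inputs, the logic is probably missing or hardcoded.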

3. Debugging Complexity

Scenario: A small business generates a CRM app with an AI tool. The notification system malfunctions, sending duplicate notifications.

Generated Code Example:

```javascript
// Generated by AI
reminders.forEach(reminder => {
  if (reminder.date === today) {
    sendNotification(reminder.userId, reminder.message);
    sendNotification(reminder.userId, reminder.message);
  }
});
```

Issue: Duplicate notification logic due to repeated function calls.

Sometimes the AI itself picks this one up (you know when it suggests refactoring the code?). It is also easy to catch during your happy-path scenario: check that you received the notification only once.
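The sketch below pairs the fix with that happy-path check. It assumes the notification sender can be passed in as a parameter. `sendDueReminders` and `recordNotification` are hypothetical names, and the dates are sample data. Because the test records calls instead of sending real notifications, "received only once" becomes something a test can assert.

```javascript
// Fixed version: one notification per due reminder.
// `send` is injected so a test can record calls instead of notifying real users.
function sendDueReminders(reminders, today, send) {
  reminders.forEach((reminder) => {
    if (reminder.date === today) {
      send(reminder.userId, reminder.message);
    }
  });
}

// Test double: records every notification instead of sending it.
const sent = [];
const recordNotification = (userId, message) => sent.push({ userId, message });

const today = "2024-05-01";
sendDueReminders(
  [
    { userId: "u1", date: today, message: "Call the client" },
    { userId: "u2", date: "2024-05-02", message: "Send invoice" },
  ],
  today,
  recordNotification,
);

// Happy-path check: exactly one notification for the single due reminder.
console.log(sent.length); // 1
```

Run the same check against the generated version and it records 2 notifications, which exposes the duplicate call.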

4. Scalability Concerns

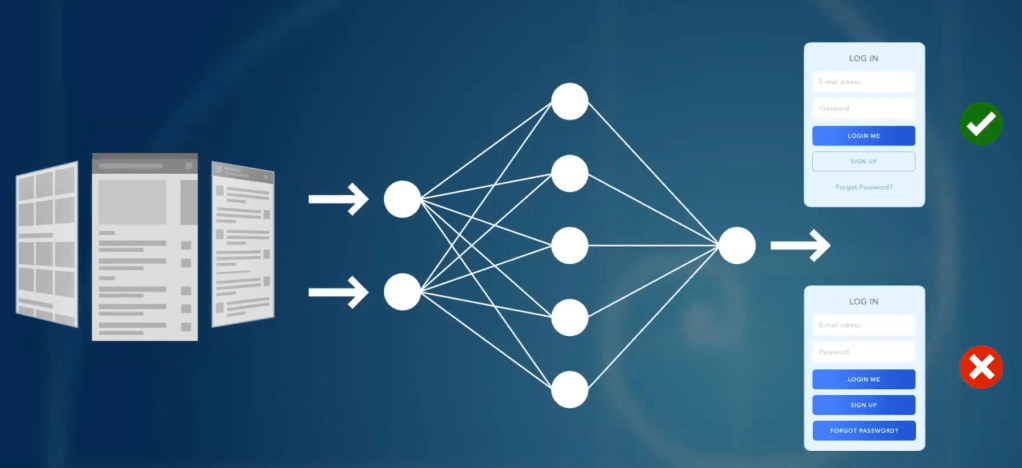

Scenario: A social media startup builds its platform. The AI-generated code fetches user data inefficiently during logins, causing delays as the user base grows.

Generated Code Example:

```javascript
// Generated by AI
let userData = {};
userIds.forEach(userId => {
  userData[userId] = db.query(`SELECT * FROM users WHERE id = ${userId}`);
});
```

Issue: The loop-based query structure slows down login times for large user bases.

This one may only be identified late in the development cycle unless you run performance tests early on. You will probably only notice it once you have a large database of users. It is easy to fix, but better fixed before it becomes a headache.
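An early performance test does not need a huge real database. The sketch below uses a hypothetical `createUsersDb` fake that counts round trips. It also assumes 5 ms per query, which is a placeholder and not a measurement. Growing the simulated user base shows that the loop's query count rises with the number of users, while a batched query stays constant.

```javascript
// Hypothetical round-trip latency, for illustration only (not a measurement).
const ASSUMED_MS_PER_QUERY = 5;

// Fake users table that counts round trips instead of running SQL.
function createUsersDb(userCount) {
  const users = new Map();
  for (let id = 1; id <= userCount; id++) {
    users.set(id, { id, name: `user${id}` });
  }
  let queries = 0;
  return {
    get queries() {
      return queries;
    },
    // One round trip per call, however many ids are requested.
    fetchUsers(ids) {
      queries += 1;
      return ids.map((id) => users.get(id));
    },
  };
}

// Pattern from the generated code: one query per user.
function loadUserDataLoop(db, userIds) {
  const userData = {};
  userIds.forEach((userId) => {
    userData[userId] = db.fetchUsers([userId])[0];
  });
  return userData;
}

// Batched alternative: one query for all users.
function loadUserDataBatched(db, userIds) {
  const userData = {};
  db.fetchUsers(userIds).forEach((user) => {
    userData[user.id] = user;
  });
  return userData;
}

// Grow the user base and watch how query count (and estimated time) scales.
const results = [];
for (const size of [10, 1000, 10000]) {
  const ids = Array.from({ length: size }, (_, i) => i + 1);
  const loopDb = createUsersDb(size);
  const batchedDb = createUsersDb(size);
  loadUserDataLoop(loopDb, ids);
  loadUserDataBatched(batchedDb, ids);
  results.push({ size, loopQueries: loopDb.queries, batchedQueries: batchedDb.queries });
  console.log(
    `${size} users: loop ~${loopDb.queries * ASSUMED_MS_PER_QUERY} ms, ` +
      `batched ~${batchedDb.queries * ASSUMED_MS_PER_QUERY} ms`,
  );
}
```

In a real app, a single very large `IN (...)` list has limits of its own, so you may want to fetch in pages. The point of the test is to make the growth visible long before real users feel it.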

5. Security Vulnerabilities

Scenario: A healthcare startup generates a patient portal app. The AI-generated code stores sensitive data without encryption.

Generated Code Example:

```javascript
// Generated by AI
db.insert(`INSERT INTO patients (name, dob, medicalRecord) VALUES ('${name}', '${dob}', '${medicalRecord}')`);
```

Issue: Plain text storage of sensitive information.

This is another typical issue in AI-generated apps: they often lack proper data security. Be extra cautious when checking data transactions and how the data is managed and stored.
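The sketch below shows two fixes and assumes Node.js with its built-in `node:crypto` module. First, the medical record is encrypted with AES-256-GCM before it reaches the database. Second, the values are passed as bound parameters rather than interpolated into the SQL string, because the original string-built query also leaves the door open to SQL injection. `encryptField`, `decryptField`, and `buildPatientInsert` are hypothetical helpers. The `?` placeholder syntax depends on your database driver. The random key stands in for one loaded from a proper secret store. Which other columns (for example `name` or `dob`) also need encryption depends on your own requirements.

```javascript
const crypto = require("node:crypto");

// Assumption: in production the key comes from a secret store or key-management
// service, never from source code. A random key is generated here only so the sketch runs.
const key = crypto.randomBytes(32); // 256-bit key for AES-256-GCM

// Encrypts a string; returns base64 of iv (12 bytes) + auth tag (16 bytes) + ciphertext.
function encryptField(plaintext, key) {
  const iv = crypto.randomBytes(12); // fresh nonce for every encryption
  const cipher = crypto.createCipheriv("aes-256-gcm", key, iv);
  const ciphertext = Buffer.concat([cipher.update(plaintext, "utf8"), cipher.final()]);
  const tag = cipher.getAuthTag();
  return Buffer.concat([iv, tag, ciphertext]).toString("base64");
}

// Reverses encryptField; throws if the data was tampered with or the key is wrong.
function decryptField(encoded, key) {
  const data = Buffer.from(encoded, "base64");
  const iv = data.subarray(0, 12);
  const tag = data.subarray(12, 28);
  const ciphertext = data.subarray(28);
  const decipher = crypto.createDecipheriv("aes-256-gcm", key, iv);
  decipher.setAuthTag(tag);
  return Buffer.concat([decipher.update(ciphertext), decipher.final()]).toString("utf8");
}

// Builds a parameterized statement so the driver, not string concatenation, handles the values.
function buildPatientInsert(name, dob, medicalRecord, key) {
  return {
    sql: "INSERT INTO patients (name, dob, medicalRecord) VALUES (?, ?, ?)",
    params: [name, dob, encryptField(medicalRecord, key)],
  };
}

const stmt = buildPatientInsert("Jane Doe", "1980-01-01", "Allergic to penicillin", key);
console.log(stmt.params[2] !== "Allergic to penicillin"); // true: the stored value is ciphertext
console.log(decryptField(stmt.params[2], key)); // Allergic to penicillin
```

To test for this, inspect what actually lands in the database (or in the `params` you pass to it) and confirm that sensitive fields never appear in plain text.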

6. Over-Reliance on AI

Scenario: A freelance entrepreneur creates a budgeting app. When a bug arises in the expense tracker, the entrepreneur struggles to debug it due to limited coding knowledge.

Generated Code Example:

```javascript
// Generated by AI
let expenses = [];
expenseItems.forEach(item => {
  expenses.push(item.amount);
});
let total = expenses.reduce((sum, amount) => sum + amount, 0) * discount;
```

Issue: Misapplied logic causes an incorrect total calculation.

This is another one the AI can catch while developing the app. Because AI sometimes mixes back-end and front-end code, the result can be hard to debug even for an experienced developer. For someone without coding skills, the challenge is even greater. AI can also help you find the error. You can probably catch this one not only when deploying, but also during your happy-path scenario.
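A known-answer test is a quick way to catch this kind of bug: choose inputs whose correct total you can work out by hand, then compare. The sketch below assumes the discount is meant as the fraction taken off the total. The original code does not say, and `calculateTotal` and `discountRate` are hypothetical names.

```javascript
// Assumption: `discountRate` is the fraction taken off the total
// (0.25 means 25% off), not the fraction of the total the user pays.
function calculateTotal(expenseItems, discountRate = 0) {
  if (discountRate < 0 || discountRate > 1) {
    throw new RangeError("discountRate must be between 0 and 1");
  }
  const subtotal = expenseItems.reduce((sum, item) => sum + item.amount, 0);
  return subtotal * (1 - discountRate);
}

const expenseItems = [{ amount: 40 }, { amount: 60 }];
console.log(calculateTotal(expenseItems, 0.25)); // 75 (the generated code would return 25)
console.log(calculateTotal(expenseItems)); // 100
```

Expenses of 40 and 60 with 25% off should come to 75. A total of 25 immediately shows that the discount was applied as a multiplier instead of a reduction.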

Not all AI coding platforms write tests for their own code; some only do so when explicitly asked. Lovable, for example, doesn't create any tests for its code. This is another thing to keep in mind when using these tools.

Another point is that AI is not very good at keeping up with the latest technologies. Take blockchains: it is still not possible to do much with them, though maybe it is only a matter of time? These technologies keep changing and evolving every second you breathe. AI can't keep up yet, and neither can humans 😂

Some Tips for Maintaining AI Coded Apps

- Conduct Comprehensive, Frequent Code Reviews

- Implement Testing Protocols

- Guide the AI to Follow Coding Best Practices

- Plan for Scalability

- Prioritise Security

- Foster Developer Expertise