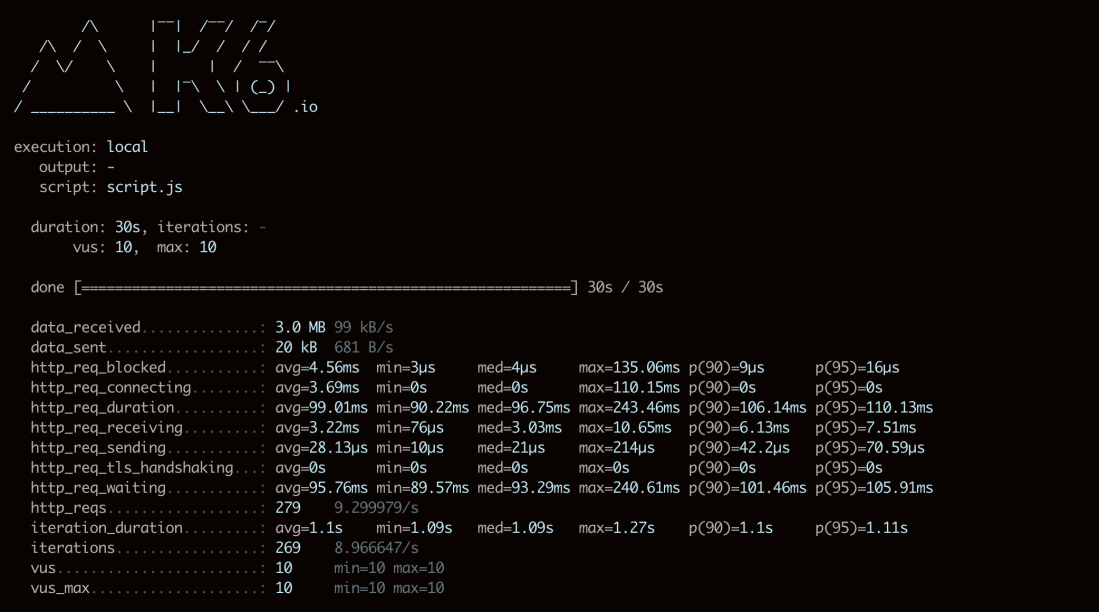

Hello guys, after a long break I am posting about a previous project where I created some performance tests with Artillery to check the reliability of the server. Artillery is an npm library that helps you with load tests. It is very simple to use, and the scripts are written in YAML (.yml), so make sure the indentation is right.

So in the load-tests.yml file you will find this script:

```yaml
config:
  target: "https://YOUR-HOST-HERE" # Here you need to add your host URL
  processor: "helpers/pre-request.js" # File with the pre-request function we use to create the data
  timeout: 3 # Timeout (in seconds) for each request; when it is exceeded, the flow stops and the scenario is tagged as a failure
  ensure:
    p95: 1000 # Make Artillery exit with a non-zero code if the 95th percentile response time is above 1000 ms, useful for CI/CD
  plugins:
    expect: {}
  environments:
    qa:
      target: "https://YOUR-HOST-HERE-QA-ENV" # Here you need to add your QA env URL
      phases:
        - duration: 600 # Duration of the test, in this case 10 minutes
          arrivalRate: 2 # Create 2 new virtual users every second for 10 minutes
          name: "Sustained max load 2/second"
    dev:
      target: "https://YOUR-HOST-HERE-DEV-ENV" # Here you need to add your Dev env URL
      phases:
        - duration: 120
          arrivalRate: 0
          rampTo: 10 # Ramp the arrival rate up from 0 to 10 new virtual users per second over 2 minutes
          name: "Warm up the application"
        - duration: 3600
          arrivalCount: 10 # Fixed count of 10 arrivals spread over 1 hour (approximately 1 every 6 minutes)
          name: "Sustained load of 10 arrivals over 1 hour"
  defaults:
    headers:
      content-type: "application/json" # Default headers needed to send the requests

scenarios:
  - name: "Send User Data"
    flow:
      - function: "generateRandomData" # Function that we use to create the random data
      - post:
          headers:
            uuid: "{{ uuid }}" # Variable with the value set by the generateRandomData function
          url: "/PATH-HERE" # Path of your request
          json:
            name: "{{ name }}"
          expect:
            - statusCode: 200 # Assertions, in this case we only assert the status code
      - log: "Sent name: {{ name }} request to /PATH-HERE"
      - think: 30 # Wait 30 seconds before running the next request
      - post:
          headers:
            uuid: "{{ uuid }}"
          url: "/PATH-HERE"
          json:
            name: "{{ mobile }}"
          expect:
            - statusCode: 200
      - log: "Sent mobile: {{ mobile }} request to /PATH-HERE"
```
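The phases above can be translated into rough numbers of virtual users. Below is a small sketch in plain JavaScript. It is not part of Artillery, and estimateArrivals and estimateConcurrentUsers are hypothetical helpers that copy the values from the qa and dev environments. The sketch estimates how many users each phase creates. It assumes that arrivals are spread evenly and that a rampTo phase ramps the rate linearly. It also applies Little's law to approximate how many users are active at once, given that each user spends at least the 30 second think time in the flow.

```javascript
// Hypothetical helper (not part of Artillery): estimates how many virtual
// users a phase creates, assuming evenly spread arrivals and a linear ramp.
function estimateArrivals (phase) {
  if (phase.arrivalCount !== undefined) {
    // arrivalCount is a fixed total for the whole phase
    return phase.arrivalCount
  }
  if (phase.rampTo !== undefined) {
    // Linear ramp: the average rate is the midpoint of the start and end rates
    return ((phase.arrivalRate + phase.rampTo) / 2) * phase.duration
  }
  // Constant rate: arrivalRate new virtual users every second
  return phase.arrivalRate * phase.duration
}

// Little's law (L = arrival rate x time in system): an approximation of
// how many users are active at the same time
function estimateConcurrentUsers (arrivalRatePerSecond, secondsPerUser) {
  return arrivalRatePerSecond * secondsPerUser
}

// Values copied from the qa and dev environments in load-tests.yml
const phases = {
  qa: { duration: 600, arrivalRate: 2 },
  devWarmUp: { duration: 120, arrivalRate: 0, rampTo: 10 },
  devSustained: { duration: 3600, arrivalCount: 10 }
}

for (const [label, phase] of Object.entries(phases)) {
  console.log(`${label}: ~${estimateArrivals(phase)} virtual users`)
}

// 3600 s / 10 arrivals = one new virtual user roughly every 360 s (6 minutes)
const secondsBetween = phases.devSustained.duration / phases.devSustained.arrivalCount
console.log(`devSustained: one arrival every ${secondsBetween} s`)

// In qa each user waits at least 30 s (think: 30), so roughly 60 or more are active at once
console.log(`qa: at least ~${estimateConcurrentUsers(2, 30)} concurrent users`)
```

The warm-up ramp averages 5 new users per second over 2 minutes, so it lands at about 600 users. The last dev phase only starts 10 users in total.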

Now, for the function that creates the data, I used the Faker library, which you need to install in your project with npm (npm install faker). Then export the function so Artillery can load it from the file set in processor. To make the generated values available to the scenario, set them on userContext.vars. Always accept the parameters userContext, events and done, and call done() when the function finishes, so the function can be used in the Artillery scripts.

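```javascript
const Faker = require('faker')

// Export the function so Artillery can find it through config.processor
module.exports = {
  generateRandomData
}

// Artillery calls this with (userContext, events, done); anything set on
// userContext.vars can be used in the scenario as {{ variableName }}
function generateRandomData (userContext, events, done) {
  userContext.vars.name = `${Faker.name.firstName()} ${Faker.name.lastName()} PerformanceTests`
  userContext.vars.mobile = `+44 0 ${Faker.random.number({min: 1000000, max: 9999999})}`
  userContext.vars.uuid = Faker.random.uuid()
  userContext.vars.email = Faker.internet.email()
  // Tell Artillery the function has finished so the flow can continue
  return done()
}
```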
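You can check that a processor function sets the variables you expect before running a full load test. To do that, call the function directly from Node with simple stand-ins for userContext, events and done. The sketch below is hypothetical. generateTestData is a dependency-free variant that swaps Faker for Node's built-in crypto module, and it only covers the mobile and uuid variables. It uses crypto.randomInt and crypto.randomUUID, so it assumes Node.js 14.17 or newer.

```javascript
const crypto = require('crypto')
const { EventEmitter } = require('events')

// Hypothetical processor with the same (userContext, events, done) signature
// as generateRandomData, using Node's built-in crypto instead of Faker
function generateTestData (userContext, events, done) {
  // randomInt's upper bound is exclusive, so this gives 1000000 to 9999999
  userContext.vars.mobile = `+44 0 ${crypto.randomInt(1000000, 10000000)}`
  userContext.vars.uuid = crypto.randomUUID()
  return done()
}

// Minimal stand-ins for what Artillery passes to the function
const userContext = { vars: {} }
const events = new EventEmitter()

generateTestData(userContext, events, (err) => {
  if (err) throw err
  // These are the values a scenario would read as {{ mobile }} and {{ uuid }}
  console.log(userContext.vars)
})
```

The same pattern should work for generateRandomData once faker is installed. Require it from helpers/pre-request.js and pass it the same stand-ins.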

This is just an example, but you can check out how powerful and simple Artillery is on their website.

You can see the entire project with the endurance and load scripts here: https://github.com/rafaelaazevedo/artilleryExamples

See you guys!